I’m fascinated by the visual appearance of cities: how different do individual streets or areas look graphically?

Letters are ubiquitous in our environment. Yet their form, color, size and type differ greatly depending on whether one is in a shopping mall, a historic city center or on a highway. Only when we spend time with preschool children do we become aware that not everyone can read these letters. These children see letters as what they actually are: shapes. Once we have learned to read, it becomes impossible to see only the shapes, colors, sizes and materials. The “Urban Alphabets” project aims to bring these aspects back into our perception.

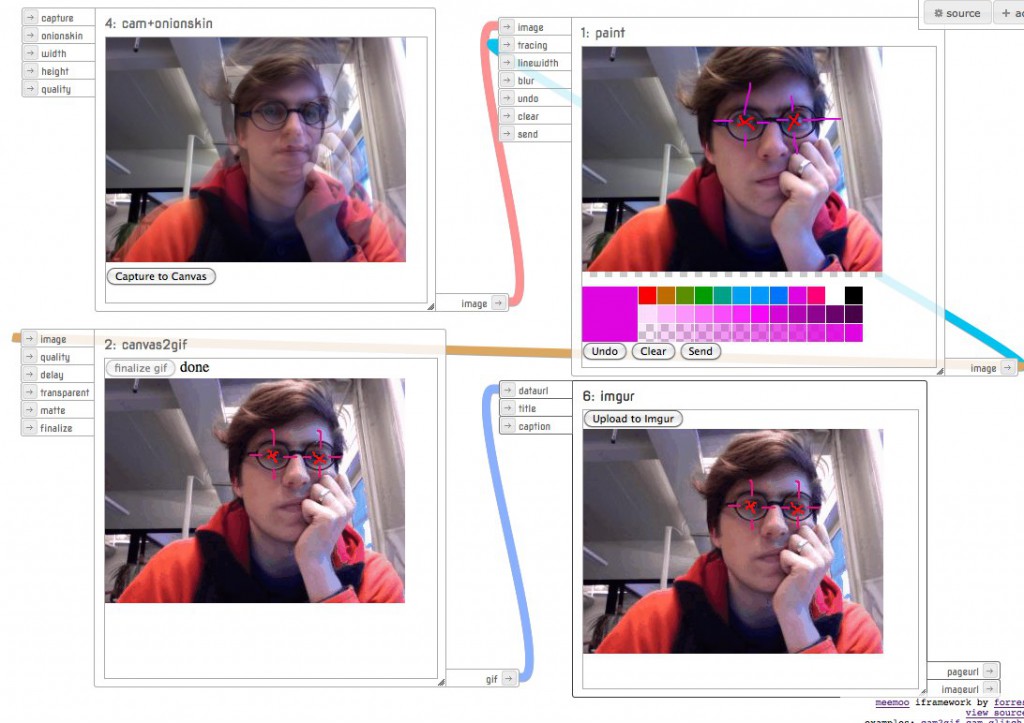

Because the postproduction effort had always been too great to create more urban alphabets, I started developing an iPhone app to solve this problem during the “multitouch interaction” course in December 2012. The app lets you create urban alphabets on the go with the camera, and it can also use pictures from the photo library. You can also type your own text, which is then rendered using the letters you have added to your alphabet.

As the first presentation showed, the app can also be used in many ways outside the urban context. Letters are everywhere.

Since playing with the app is so much fun, I will definitely develop it further. One idea is to geotag all the images taken and then use letters from the surrounding area when tweeting, sending text messages, …