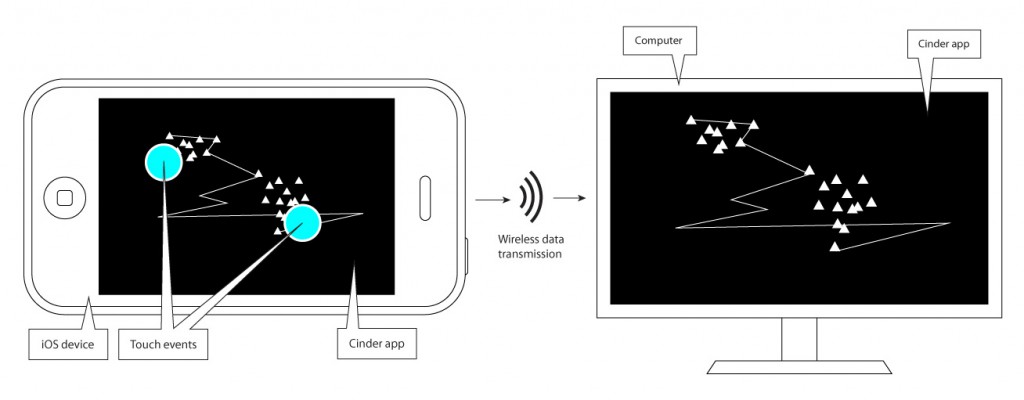

The iOS AV Performer is a generative live visuals application for iPhone, iPod Touch or iPad that can control its host application (you can call it the Mac OS AV Performer) over a wireless network. The whole solution will consist of two applications, both built with Cinder, that run on two different platforms:

- Generative visuals application that will run on a Mac OS computer (host application)

- Controller application (with similar visual features) that will run on an iOS device (controller application)

The OSC (Open Sound Control) protocol will be used for sending and receiving commands and variables between the two applications. Here is a diagram that shows how it will work:

This kind of application will let you use multi-touch gestures as the input source for creating real-time graphics. It is a more natural way of getting real-time visual feedback than a mouse and keyboard, or even a regular MIDI controller.

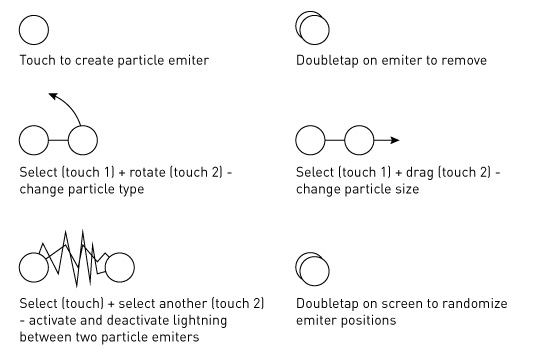

Of course, this depends on how you build the actual visual app. The mapping between gestures and the functions that generate and control the visuals is just as important. Here are six basic ways of interacting with the app:

I may also use the gyroscope and accelerometer readings of the iPhone (or other iDevice) for extra effects.
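To make the controller-to-host idea a bit more concrete, here is a minimal sketch of how one touch could become one OSC message. It is not the actual implementation: the address `/avp/hue`, the function names and the choice of mapping the horizontal touch position to a single float parameter are all assumptions made up for illustration. Only the byte layout (NUL-padded strings in multiples of four bytes, a `,f` type tag, a big-endian 32-bit float) follows the OSC 1.0 specification. In the real apps an OSC library would most likely do the encoding and the UDP sending.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Append an OSC string: the characters, then NUL bytes up to the next
// multiple of four (always at least one NUL terminator), per OSC 1.0.
static void appendOscString(std::vector<std::uint8_t>& out, const std::string& s) {
    out.insert(out.end(), s.begin(), s.end());
    const std::size_t padded = (s.size() / 4 + 1) * 4;
    out.insert(out.end(), padded - s.size(), std::uint8_t{0});
}

// Append a 32-bit float in big-endian (network) byte order.
static void appendOscFloat(std::vector<std::uint8_t>& out, float value) {
    static_assert(sizeof(float) == 4, "OSC float32 expects a 4-byte float");
    std::uint32_t bits = 0;
    std::memcpy(&bits, &value, sizeof bits);
    for (int shift = 24; shift >= 0; shift -= 8) {
        out.push_back(static_cast<std::uint8_t>((bits >> shift) & 0xFF));
    }
}

// Hypothetical gesture mapping: horizontal touch position in pixels
// becomes a parameter clamped to the range [0, 1].
float touchToParam(float x, float viewWidth) {
    if (viewWidth <= 0.0f) return 0.0f;
    const float t = x / viewWidth;
    return t < 0.0f ? 0.0f : (t > 1.0f ? 1.0f : t);
}

// Build a complete OSC message carrying a single float argument.
std::vector<std::uint8_t> encodeOscFloatMessage(const std::string& address, float value) {
    std::vector<std::uint8_t> packet;
    appendOscString(packet, address);  // e.g. "/avp/hue" (made-up address)
    appendOscString(packet, ",f");     // type tag string: one float32
    appendOscFloat(packet, value);
    return packet;
}
```

On the controller side, a call such as `encodeOscFloatMessage("/avp/hue", touchToParam(touchX, viewWidth))` would produce the bytes to send over UDP. The host would decode the same layout and feed the value into whatever visual parameter is mapped to that address.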