Dec 21, 2009

Designing Interaction with Electronics workshop photos 13.11.2009

Dec 14, 2009

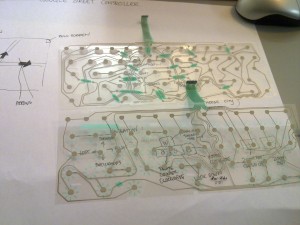

Control surface for Google Maps

By Mr. V & Miriam Lerkenfeld

The aim is to make Google Street View a more fun and physical experience, in which the user controls the direction and view of the web service through movement. We also wanted to steer away from the traditional keys and offer a dynamic, fun way to see new parts of the world: Tokyo, Los Angeles, Paris, you decide where to go!

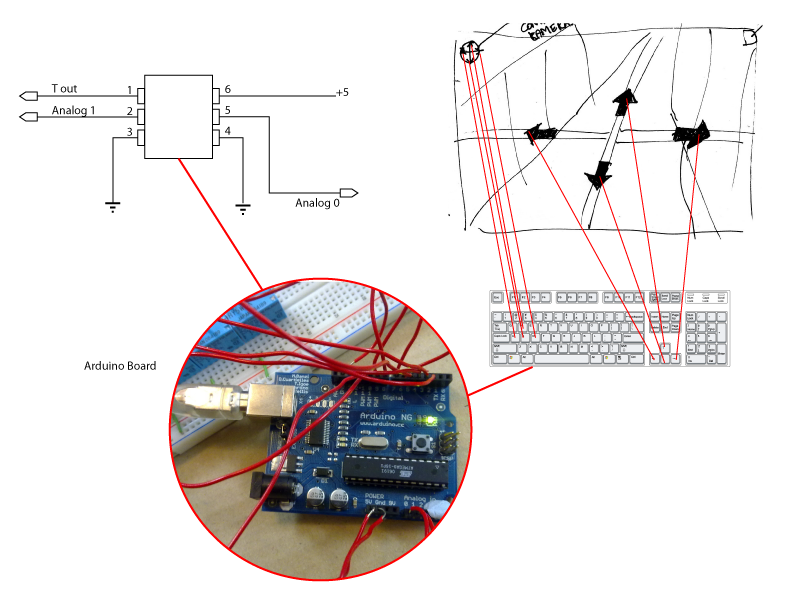

After a few different concept ideas, we created an interactive controller for Google Maps. It consists of an Arduino board, an old keyboard, eight switches and two kinds of sensors: an accelerometer, which measures tilt and motion, and ultrasonic sensors, which measure distance. Basically, the programme reads the values from the sensors and uses them to trigger the keyboard’s pre-defined keys. The accelerometer controls the camera’s pan and tilt keys (w, a, s, d), whereas two PING))) ultrasonic sensors control the horizontal movement in the street (arrow keys).
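The mapping from sensor readings to keys could look roughly like the sketch below. It is not the project's Arduino code: the function name, the thresholds, the dead zone and the assignment of each ultrasonic sensor to a pair of arrow keys are all assumptions made for illustration.

```python
# Hypothetical thresholds; the values used in the original sketch are not given.
TILT_THRESHOLD = 0.3   # normalised tilt, -1.0 .. 1.0
NEAR_CM = 30           # closer than this presses one arrow key
FAR_CM = 80            # farther than this presses the opposite arrow key


def keys_for_reading(tilt_x, tilt_y, dist_a_cm, dist_b_cm):
    """Return the set of keys that should be held for one sensor reading."""
    keys = set()

    # Accelerometer: camera pan (a/d) and tilt (w/s).
    if tilt_x > TILT_THRESHOLD:
        keys.add("d")
    elif tilt_x < -TILT_THRESHOLD:
        keys.add("a")
    if tilt_y > TILT_THRESHOLD:
        keys.add("w")
    elif tilt_y < -TILT_THRESHOLD:
        keys.add("s")

    # Assumed split: ultrasonic sensor A drives forward/back, sensor B left/right.
    # Distances between NEAR_CM and FAR_CM form a dead zone (no key pressed).
    if dist_a_cm < NEAR_CM:
        keys.add("up")
    elif dist_a_cm > FAR_CM:
        keys.add("down")
    if dist_b_cm < NEAR_CM:
        keys.add("left")
    elif dist_b_cm > FAR_CM:
        keys.add("right")
    return keys
```

For example, `keys_for_reading(0.5, 0.0, 50, 50)` holds only the "d" key. In the actual build, each of the eight keys would presumably be closed by one of the eight switches wired to the keyboard.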

Keyboard and sketches

Distance measurement controlling forward, back, left and right

Setup: circuit between Arduino and keyboard with the switches

Schematic Drawing of Google Maps project

Dec 3, 2009

Flash player connected to Arduino via Processing

slide_demo_v2_analogwrite_read

- Socket policy uses port 1843.

- Actual data communication uses port 6666.

Some references to set up a policy server for Flash player.
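A minimal sketch of such a policy server is shown below, assuming the two ports listed above. Flash Player sends the string `<policy-file-request/>` followed by a null byte and expects a null-terminated cross-domain policy in reply. The function and class names are illustrative, and `domain="*"` is a permissive choice made only to keep the example short.

```python
import socketserver

POLICY_PORT = 1843   # socket policy port named in this post
DATA_PORT = 6666     # data port named in this post


def build_policy(data_port):
    """Return a null-terminated policy that allows access to data_port."""
    xml = (
        '<?xml version="1.0"?>'
        "<cross-domain-policy>"
        f'<allow-access-from domain="*" to-ports="{data_port}"/>'
        "</cross-domain-policy>"
    )
    return xml.encode("ascii") + b"\x00"


class PolicyHandler(socketserver.BaseRequestHandler):
    """Answer one policy request per connection, then close."""

    def handle(self):
        request = self.request.recv(1024)
        if request.startswith(b"<policy-file-request/>"):
            self.request.sendall(build_policy(DATA_PORT))


def serve_policy(host="0.0.0.0", port=POLICY_PORT):
    """Run the policy server until interrupted (blocks)."""
    with socketserver.TCPServer((host, port), PolicyHandler) as server:
        server.serve_forever()
```

Calling `serve_policy()` starts the server. The Flash movie would then need to load the policy from port 1843 before opening its data socket on port 6666.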

Nov 13, 2009

Cycle Experiment

A.: We wanted to use a MIDI keyboard as the output device hooked up to a bicycle, with the sounds varying according to the cycling style. The plan was to trigger events using Hall effect sensors that detect magnets attached to the pedals and wheels, which rotate past the sensors at a speed set by the cyclist.

We went to the recycling centre to find a MIDI keyboard. We couldn’t find one, but found an old exercise bike, which we bought for €5.

The plan now is to get output from cycling action on the exercise bike to manipulate images and sound.

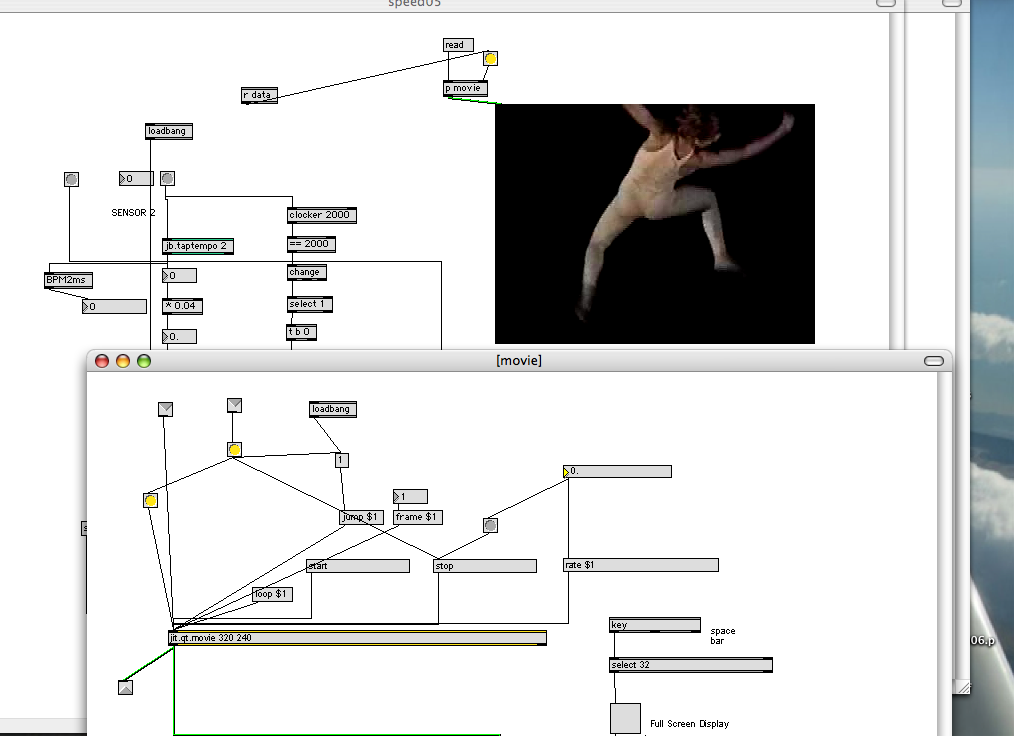

B.: Fitting the bike with sensors and creating the first schematic using Max/MSP. We used neodymium magnets salvaged from broken hard drives and mounted them on the rim of the rear wheel. As the wheel rotated, the magnets passed the Hall sensor, which output a digital signal via a microcontroller.

The incoming sensor data was sent to Max/MSP and enabled us to determine the number of rotations per second, i.e., the number of times per second the magnet passed the Hall sensor. From this data, we were able to calculate speed and distance. We also used the rotational sensor data to control the playback rate of the film sequence as well as the audio playback rate. As the cyclist pedalled faster, the playback speed increased. The result was a “Cycling DJ” allowing interaction with both the visual and audio environments.
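The calculation can be sketched in a few lines. The original patch was built in Max/MSP, so the Python below only illustrates the arithmetic. The wheel diameter, the number of magnets per revolution and the nominal cadence used to scale playback are assumed values.

```python
import math


def cycling_stats(pulse_times_s, wheel_diameter_m=0.6, magnets_per_rev=1):
    """Return (rotations_per_second, speed_m_per_s, distance_m).

    pulse_times_s holds the timestamps, in seconds, at which the Hall
    sensor fired. Diameter and magnet count are assumptions.
    """
    if len(pulse_times_s) < 2:
        return 0.0, 0.0, 0.0
    revolutions = (len(pulse_times_s) - 1) / magnets_per_rev
    elapsed_s = pulse_times_s[-1] - pulse_times_s[0]
    circumference_m = math.pi * wheel_diameter_m
    rps = revolutions / elapsed_s if elapsed_s > 0 else 0.0
    return rps, rps * circumference_m, revolutions * circumference_m


def playback_rate(rps, nominal_rps=1.5, max_rate=4.0):
    """Scale playback so that nominal_rps plays at normal speed (1.0)."""
    return max(0.0, min(max_rate, rps / nominal_rps))
```

With pulses at 0.0, 0.5, 1.0, 1.5 and 2.0 seconds, the wheel turns twice per second, and `playback_rate(3.0)` doubles the playback speed.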

C.: Recorded cycling journey

D.: Calibration of cycling movement and video action.

Max/MSP code. More info email: simon@therealsimon.com

Nov 12, 2009

Charlie Interactive Shadow Theatre

Nov 11, 2009

Tangible communication

Nov 11, 2009

Elle E. Dee & The Electrotastics

Project by Jonathan Cremieux & Juha Salonen

The aim was to create simple and fun controllers for sound and image using diverse electronic components and the Arduino platform.

Nov 9, 2009

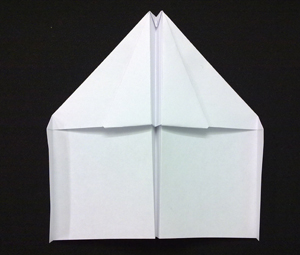

Paper Plane Pilot

Course project by Heidi Holm & Daniel Suominen – Designing Interaction with Electronics workshop 2009 at Mlab, Helsinki

Paper Plane Pilot

Day 1: Developing the idea. A simple game where you pilot a paper plane through the Media Lab. The plane is controlled with hand gestures and by blowing air from your mouth. This is achieved by using an accelerometer and an air sensor.

Aug 10, 2009

UCIT: The Heatseeker

The Heatseeker was a quickly built robot to demonstrate the Parallax Boe-Bot robot system. The robot uses two servo motors to move around and a remote-sensing infrared thermometer to measure temperatures in front of the robot.

The robot seeks heat sources by turning around until the thermometer measures a warmer spot, which makes the robot move straight towards the spot. If the warmer spot is lost, the robot starts turning and seeking again.
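The seek-and-approach behaviour amounts to a one-line decision per reading. The sketch below is a Python simulation, not the Boe-Bot program. It assumes that "warmer" means a reading at least `margin_c` degrees above an ambient baseline, and the command names are made up for illustration.

```python
def heatseeker_command(reading_c, ambient_c, margin_c=2.0):
    """Choose the next move from one thermometer reading."""
    if reading_c >= ambient_c + margin_c:
        return "forward"   # warm spot ahead: drive straight towards it
    return "turn"          # nothing warm ahead: keep rotating to search


def run(readings_c, ambient_c):
    """Map a sequence of readings to a sequence of commands."""
    return [heatseeker_command(r, ambient_c) for r in readings_c]
```

Given readings of 21.0, 21.5, 27.0, 28.0 and 21.0 degrees at 21.0 degrees ambient, the robot turns twice, drives forward twice, then goes back to turning once the warm spot is lost.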

Heat seeker from Harri Rantala for the UCIT Interaction Design with Electronics Workshop 2009 on Vimeo.

Aug 7, 2009

The UnExpressiveBrush

The UnExpressiveBrush was built to test the capabilities of the ultrasound and acceleration sensors by Parallax. The intention was to build a system where one could simulate painting with a very wet brush. With it one can paint by sprinkling with vigorous brush movements in front of a canvas. However, we cut a few corners and ended up with a system that was significantly less usable than the original plan. First of all, we did not build a paint sprinkling simulation. Instead we used GIMP and its ready-made brushes for the painting. We also did not use the acceleration information from the brush for anything else except sending a mouse down event whenever a certain threshold was exceeded and a mouse-up when acceleration returned to lower values. As a result we had the ability to sprinkle paint with high acceleration movements along one axis and to spread paint by tilting the brush to one direction. The same movements were used to select colors from the palettes available in GIMP. The laptop keyboard was needed for switching windows.
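The threshold logic described above can be sketched as follows. This is an illustration in Python rather than the original code. The threshold value and the event names are assumptions, and a real build might add hysteresis to avoid chatter near the threshold.

```python
def mouse_events(accel_samples, threshold=1.5):
    """Turn acceleration samples into (sample_index, event) pairs.

    A "down" event fires when the magnitude first exceeds the threshold,
    and an "up" event fires when it returns to or below it.
    """
    events = []
    pressed = False
    for index, accel in enumerate(accel_samples):
        if not pressed and abs(accel) > threshold:
            events.append((index, "down"))
            pressed = True
        elif pressed and abs(accel) <= threshold:
            events.append((index, "up"))
            pressed = False
    return events
```

For the samples `[0.1, 2.0, 2.5, 0.3, 0.2, 1.8, 0.0]` this yields a press at sample 1, a release at sample 3, and a second press and release at samples 5 and 6.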

The UnExpressiveBrush from Poika Isokoski and Harri Rantala.

Aug 7, 2009

The SonarHat

The Sonar Hat consists of a hat with a Parallax Board of Education tied on top. A forward facing PING))) ultrasonic sensor measures distances and a piezo speaker plays a tone based on the distance. The idea was, of course, to see if one could – at least in part – substitute vision by ultrasound navigation akin to what bats do.
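One way to map distance to tone is a clamped linear scale, sketched below. The post does not say which mapping the hat used, so the direction (closer means higher pitch), the distance range and the frequency range are all assumptions.

```python
def tone_for_distance(distance_cm, min_cm=3.0, max_cm=300.0,
                      low_hz=200.0, high_hz=2000.0):
    """Return a tone frequency that rises as an obstacle gets closer."""
    clamped = max(min_cm, min(max_cm, distance_cm))
    nearness = (max_cm - clamped) / (max_cm - min_cm)   # 0.0 far .. 1.0 near
    return low_hz + nearness * (high_hz - low_hz)
```

An obstacle at 50 cm then sounds higher than one at 100 cm, and anything beyond 300 cm plays the lowest tone.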

The SonarHat from Poika Isokoski on Vimeo.

Aug 7, 2009

Colour Selector from UCIT course

Here are some details of the colour selector made by Jaakko Hakulinen.

The application maps data from the accelerometer to a colour and uses LEDs to display this colour. The x-y axis data is converted into polar coordinates; the angle is then used as hue and the distance as colour intensity. Brightness is always at maximum. This HSB value is then converted to RGB values. In addition, a touch sensor is used to switch between reading the accelerometer data and controlling the LEDs using PWM.

The system runs entirely on the BS2. It does send some debug output, which can be read on the PC side.
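The conversion can be illustrated with Python's standard `colorsys` module. The original runs on the BS2, so this is only a sketch of the maths. It assumes that "colour intensity" corresponds to HSB saturation and that tilt values are normalised to a maximum of `max_tilt`.

```python
import colorsys
import math


def tilt_to_rgb(x, y, max_tilt=1.0):
    """Map an x-y tilt reading to an (r, g, b) tuple of 0..255 values."""
    angle = math.atan2(y, x)                          # polar angle, -pi..pi
    hue = (angle % (2 * math.pi)) / (2 * math.pi)     # wrap to 0..1
    saturation = min(1.0, math.hypot(x, y) / max_tilt)
    red, green, blue = colorsys.hsv_to_rgb(hue, saturation, 1.0)  # full brightness
    return tuple(round(channel * 255) for channel in (red, green, blue))
```

A level sensor (`x = y = 0`) gives white, while a full tilt along the positive x axis gives pure red. The resulting values could then be used as PWM duty cycles for the three LED channels.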

The colour selector from Jaakko Hakulinen on Vimeo.

Aug 6, 2009

UCIT: Vibrotactile radar using two PING))) ultrasonic sensors

This is a quite simple practice work built for the UCIT Interaction Design with Electronics workshop 2009 held by Michihito Mizutani. The work consists of four parts. 1) The BS2 reads the distance information from two PING))) ultrasonic sensors and sends it through a serial port. 2) A PC running a dedicated PD (Pure Data, http://puredata.info/) patch processes the data and sends audio signals to the left and/or right channels depending on the situation. 3) The audio output is then amplified and played through two C-2 vibrotactile voice-coil actuators. 4) The PD patch also forwards the distance information to another PC over a WLAN TCP connection, where it is visualized.

Vibrotactile radar using two PING))) ultrasonic sensors from Jussi Rantala and Jukka Raisamo from University of Tampere, Finland.

Nov 17, 2008

Colour-Tap

Concept

To make a simple and emotive communication device. The object consists of a dial that changes colour as the user rotates it. When tapped, the colour is transferred to another, remote device, which slowly fades into the tapped colour.

What we made in the workshop

We were able to make a device prototype with a dial that changed the brightness of the coloured light in the device. When tapped, the device sends the brightness information to another lamp (device), which changes its brightness accordingly.

Components used:

Basic Stamp

Accelerometer

Piezo vibration sensor

Digital to analog converter

RGB LEDs

*Group members: Takahisa Kobayashi, SunHwa Yu, Ramyah Gowrishankar

Nov 17, 2008

The Moth

Reetta Nykänen, Michail Kouratoras and Lauri Kainulainen set out to create “something visual with audio”. With very different backgrounds everyone had something unique to offer to the end result which was “Mothman” or just “The Moth”; an interactive audio-visual day/night scene of a forest with a flying moth.

For the visualizations we used Processing (processing.org), for audio we chose Pure Data (puredata.info). Interaction with the piece happened through a Basic Stamp chip connected to an Ultrasonic sensor and an ambient light sensor. The former was for distance measuring (the closer you got the bigger the moth became) and the latter switched between day and night (when it was dark, it was night).

The chip was connected to Processing through a serial/USB connection. It sent out a four-byte variable of which only the first two bytes were used: the first for the distance value and the second for the amount of light. Processing made visuals based on these and sent the first value, unmodified, to Pure Data (both running on the same computer) through an OSC (Open Sound Control) server. The light variable was converted into a simple binary value to indicate night or day. Based on these, Pure Data chose which samples to play and modified them accordingly.

No screenshots here, but the YouTube video on the student presentations says it all.
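Decoding such a packet might look like the sketch below. The actual code is in the linked archive and was written in Processing, so everything here is illustrative. It assumes one unsigned byte per value, a made-up darkness threshold and a made-up scale range for the moth.

```python
def parse_packet(packet, dark_threshold=64):
    """Split a four-byte packet into (distance, light, is_night).

    Only the first two bytes carry data; the last two are ignored.
    """
    if len(packet) != 4:
        raise ValueError("expected exactly four bytes")
    distance, light = packet[0], packet[1]
    is_night = light < dark_threshold      # binary day/night flag
    return distance, light, is_night


def moth_scale(distance, max_distance=255, min_scale=0.2, max_scale=1.0):
    """The closer the visitor, the bigger the moth."""
    nearness = 1.0 - min(distance, max_distance) / max_distance
    return min_scale + nearness * (max_scale - min_scale)
```

For instance, `parse_packet(bytes([120, 10, 0, 0]))` reports a distance of 120 and a night scene. The distance would be passed on unchanged to the audio side.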

For graphics and code go here: http://lauri.sokkelo.net/files/moth.tar.gz. Sound not included due to huge size.

Nov 7, 2008

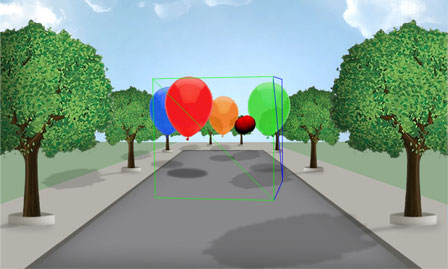

3D Flash game using sensors

Our group (Juha, Henri and Andreas) created a basic shooting game using some sensors, Flash and Python. The sensors (a compass and accelerometers) controlled the movement of the aim, and a pressure sensor controlled the shooting. The values from the sensors were sent to Flash using an XML socket server built with Python. The 3D interface was built using Papervision3D 2.0 beta.

Here is an online demo that works using the mouse for movement and the spacebar for shooting. Give the Flash player focus by clicking anywhere inside the Flash player window, otherwise the space bar won’t be recognized by the game.

The Python code for this project will be published later.

Nov 7, 2008

The Doorman

The Doorman is a funny little interactive character who welcomes every visitor to his domain, in this case Medialab. It is based on an ultrasonic distance sensor, which detects the distance of the visitor and triggers a speech sample. At this stage, the sensor differentiates five distances, each of which has its own unique speech response. The Doorman tries to get the visitor’s attention and asks him/her to approach and figure out what message he has.

The sensor is attached to a Basic Stamp, which sends MIDI note data through a modified MIDI cable to a MIDI interface. Each MIDI note triggers a different sample in the Kontakt sample player.
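The step from distance zone to MIDI note can be sketched as below. The original ran on a Basic Stamp, so this Python only illustrates the idea. The zone boundaries and note numbers are invented for the example, while the three-byte note-on layout (status `0x90` plus channel, note, velocity) follows the MIDI standard.

```python
# Hypothetical zone limits (cm) and the note assigned to each of the five zones.
ZONE_LIMITS_CM = [50, 100, 150, 200, 250]
ZONE_NOTES = [60, 62, 64, 65, 67]


def zone_for_distance(distance_cm):
    """Return the zone index 0..4, or None if the visitor is out of range."""
    for zone, limit in enumerate(ZONE_LIMITS_CM):
        if distance_cm <= limit:
            return zone
    return None


def note_on(note, velocity=100, channel=0):
    """Build a raw three-byte MIDI note-on message."""
    return bytes([0x90 | channel, note, velocity])
```

A visitor at 120 cm falls into zone 2, so the bytes sent to the sample player would be `note_on(ZONE_NOTES[2])`.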

The mouth of the Doorman is made of three LEDs. The LEDs blink from side to side while the sound sample plays. The programming here was really awesome! 🙂

The icing on the cake is doorman’s convincing (detective style) clothes. They invite visitors to a very intimate contact!

Ilkka Olander & Teemu Korpilahti

Nov 6, 2008

CALL The Great Rabbit-Moth-Synth-Man!

DIARY DAY 1:

I will be on time today!!!

…hmmm…

interesting…liquid snow…?

Makes me wonder: What is right kind of interaction & how to trigger that in a human being? Milk wasted is totally wrong … It’s still maybe better used than half of the interactive art pieces that don’t even have a reasonable function to trigger in the first place!!

DAY 2:

Even heart functions like circuits/microprocessors/Helsinki Metro or analog devices…

SIMPLY: I / O

OR +/-

DAY 3:

In the pale light of the moon

I play the game of you.

Whoever I am

whoever you are.

(N. Gaiman, Sandman)

Metaphors:

Moon, solar eclipse > Nosferatu, Noctuide, Nocturne… Underconsciousness

Sun > Butterfly, Day…

Outcome of the workshop:

Provocate your creative underconsciousness: Call the powers, call The Great Rabbit-Moth-Synth-Man!

He is so tame he will sit on your face and flap!

Feb 25, 2008

Presentations from Physical Computing course at Sibelius Academy

Nov 16, 2007

Final Demos from Designing Interaction with Electronics

- Mood shoes by Markku Ruotsalainen, Jenna Sutela and David Szauder
- Gimme Sugar by Anna Keune and Jari Suominen
- Grid Shuffling by Keri Knowles and Abhigyan Singh
- The Sixth Sense by Kimmo Karvinen and Mikko Toivonen
- HugiPet by Juho Jouhtimäki and Elise Liikala
- Digital Painting Control by Juho Jouhtimäki, Kimmo Karvinen and Mikko Toivonen
- Twinkling Gloves by Anne Naukkarinen, Pekka Salonen and Kristine Visanen
- Furry Modulator by Atle Larsen and Mikko Mutanen