Feb 25, 2008

Presentations from Physical Computing course at Sibelius Academy

Jan 22, 2008

Perrito faldero (Lap dog)

A sound object that interacts with the voice.

The aim is to create a metaphor for the digitization of sound, from a continuous signal to a discrete signal, and to try to build a machine that reacts to the voice’s expressivity.

Diagram:

input sound -> microphone -> audio interface -> computer (max/msp) -> arduino board -> motors -> output sound

Sound: the principal incoming sound is the voice.

Microphone: cardioid headband voice microphone.

Audio interface: it converts the analog signal into digital information.

Computer (Max/MSP): it analyzes the information (pitch and amplitude) that comes from the interface with the help of the fiddle object (Max/MSP). To control and communicate with the Arduino, I use the arduino object.

Arduino board: the information from the analysis modifies the number of motors that are active and the velocity of each one.

Sound object: a small machine consisting of twelve motors that are controlled by the input sound (voice). Each motor produces a pulsed, repetitive output sound. Three of them have controllable velocity; the others can only be switched on or off.

Sound: the pulsed, repetitive sound is created by each motor with a propeller that touches a fixed material on each rotation.
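As a rough illustration of the chain described above, the sketch below turns one analysis frame (pitch and amplitude) into motor commands. The twelve motors, three of them with variable speed, come from the description; the rule that amplitude sets how many motors run and pitch sets the speed, the value ranges and all names are assumptions made for this example, not the patch actually used.

```python
NUM_MOTORS = 12    # from the description of the sound object
NUM_VARIABLE = 3   # motors whose velocity can be controlled


def clamp(value, low, high):
    """Keep value inside the closed range [low, high]."""
    return max(low, min(high, value))


def motor_commands(pitch_hz, amplitude, pitch_range=(80.0, 1000.0)):
    """Map one analysis frame to motor states (hypothetical mapping).

    amplitude is assumed to be normalized to 0.0..1.0.
    Returns (speeds, switches): PWM duty 0..255 for the three variable
    motors, and on/off flags for the nine remaining motors.
    """
    # Louder voice -> more motors running.
    active = round(clamp(amplitude, 0.0, 1.0) * NUM_MOTORS)

    # Higher pitch -> faster rotation of the variable motors.
    low, high = pitch_range
    position = (clamp(pitch_hz, low, high) - low) / (high - low)
    duty = round(position * 255)

    # The variable-speed motors are switched on first, then the on/off ones.
    speeds = [duty if i < active else 0 for i in range(NUM_VARIABLE)]
    switches = [i + NUM_VARIABLE < active
                for i in range(NUM_MOTORS - NUM_VARIABLE)]
    return speeds, switches
```

In a real setup these values would still have to be sent to the board, for instance through the arduino object mentioned above.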

Alejandro Montes de Oca

Nov 20, 2007 2

Feather Report

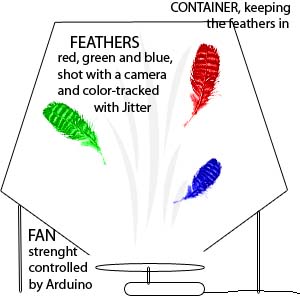

My new project plan goes as follows. I have a fan blowing air into a transparent container with feathers of different colors (say, red, green and blue). The movement of these feathers is then shot with a video camera or a webcam and color-tracked with Jitter. The X-Y movement data of each feather is then mapped to drive sound (2 control parameters per feather = 6 in total). To make it more interesting, the fan should be controlled by Arduino as well. I could drive the fan with semi-random algorithmic data to make the whole thing a self-contained living entity or, say, control it with a MIDI controller for a sound performance. Or the fan could be indirectly controlled by the feathers’ movement to introduce some feedback into the system. Furthermore, I could make the system more interesting by having several fans create different turbulent fields.

So, how easy is it to control a fan with Arduino? Would the small motors we have be powerful enough to operate as a fan (if I build a fan blade, for example from cardboard), or should I buy ready-made fans?

The color tracking works already; I’ve tested it. I should just 1.) obtain a fan, 2.) obtain feathers, 3.) obtain materials to build a container for the feathers and build it, 4.) figure out how to control the fan with Arduino and 5.) build a sound-making instrument and map the parameters in a musical way.

Comments, suggestions?

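To make the mapping idea a bit more concrete, here is a small sketch of the two pieces of logic in the plan: turning the tracked X-Y positions of three feathers into six control values, and driving the fan with semi-random data. The 0..127 control range, the 0..255 fan duty range, the random-walk approach and the function names are all assumptions for illustration; the tracking itself would still happen in Jitter.

```python
import random


def feather_controls(positions, frame_width, frame_height):
    """Turn tracked (x, y) pixel positions into 0..127 control values.

    Returns a flat list [x1, y1, x2, y2, ...], so three feathers give
    six control parameters.
    """
    controls = []
    for x, y in positions:
        # Normalize to 0.0..1.0 and clip positions outside the frame.
        nx = min(max(x / frame_width, 0.0), 1.0)
        ny = min(max(y / frame_height, 0.0), 1.0)
        controls.append(int(nx * 127))
        controls.append(int(ny * 127))
    return controls


def next_fan_duty(current, rng, max_step=20):
    """One step of a bounded random walk for the fan duty (0..255)."""
    step = rng.randint(-max_step, max_step)
    return min(max(current + step, 0), 255)
```

Calling `next_fan_duty` repeatedly with a `random.Random()` instance gives a fan speed that drifts instead of jumping, which might suit the "alive entity" idea; feeding the feather positions into the step size would be one way to add the feedback mentioned above.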

Nov 16, 2007 1

Final Demos from Designing Interaction with Electronics

Mood shoes by Markku Ruotsalainen, Jenna Sutela and David Szauder

Gimme Sugar by Anna Keune and Jari Suominen

Grid Shuffling by Keri Knowles and Abhigyan Singh

The Sixth Sense by Kimmo Karvinen and Mikko Toivonen

HugiPet by Juho Jouhtimäki and Elise Liikala

Digital Painting Control by Juho Jouhtimäki, Kimmo Karvinen and Mikko Toivonen

Twinkling Gloves by Anne Naukkarinen, Pekka Salonen and Kristine Visanen

Furry Modulator by Atle Larsen and Mikko Mutanen

Nov 13, 2007

Furry Modulator

…………………………

-Playing on air

A Spatial Instrument

-a variation of the famous ‘Theremin’ by the Russian inventor Léon Theremin (1919)

Inputs

-distance

-sectors in the visual field

-ripple gestures

Processing

-movement through the sectors and steps builds up a sequence

-making a ripple gesture triggers a chain reaction of samples in the stack

-also a single sample can be triggered

-distance affects the volume and pitch of the ambient background tone

-a combination of distance and spatial grid selects a sample from the pool

-temporal effects on volume and filtering for the samples in a chain reaction

Outputs

– MIDI events into Reason (possibly)

– samples loaded inside PD

– stereo sound through loudspeakers

Interface

Bodily movement in space for a single person using 2 hands and ripple gestures controlling speed, pitch and the triggering of (stacked) sample sequences.

Nov 12, 2007

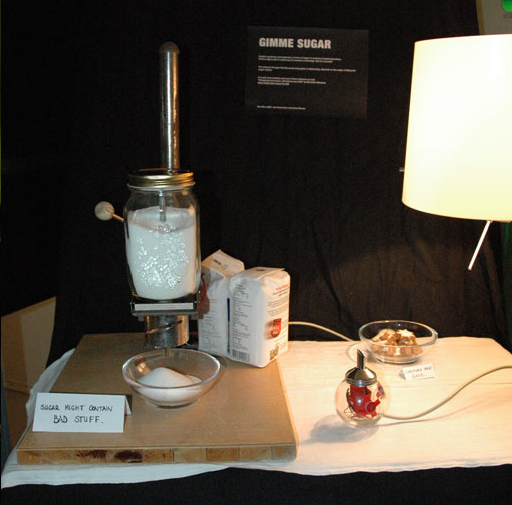

gimme sugar

Idea: a gesture-controlled sugar dispenser.

Depending on the tilt angle of an object shaped like a sugar shaker, a bowl placed elsewhere opens and dispenses sugar. The more you shake or tilt the object, the more sugar is dispensed. This could be a fun way to sweeten a beverage, or to represent, in the form of sugar, how much attention one gives to a significant other.
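The "more tilt, more sugar" rule could be sketched like this. The dead zone (so that simply holding the shaker does nothing), the 90 degree full-open angle and the function name are assumptions for the example, not part of the original idea.

```python
def dispenser_opening(tilt_deg, dead_zone_deg=20.0, full_tilt_deg=90.0):
    """Return how far the bowl opens: 0.0 (closed) to 1.0 (fully open).

    Tilt angles inside the dead zone keep the bowl closed; beyond it the
    opening grows linearly until the shaker reaches full_tilt_deg.
    """
    if tilt_deg <= dead_zone_deg:
        return 0.0
    span = full_tilt_deg - dead_zone_deg
    return min((tilt_deg - dead_zone_deg) / span, 1.0)
```

The returned fraction would then be scaled to whatever moves the bowl, for example a servo angle.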

Nov 12, 2007

Grid Shuffling

Proximity sensors or touch sensors will be arranged behind a horizontal panel controlling a grid of objects (for example, images) displayed on a computer screen.

There will be an intuitive mapping between the objects on the screen and the physical panel on the table. The user can select an object, move it to another location and place it there using hand gestures and movement over the panel. The user would also see the change reflected simultaneously on the computer screen.

Working:

A section of the panel senses if an object is to be selected by the hand being at the closest proximity. By making the gesture of grabbing and pulling away, the increased distance from the area registers that the object in the section is selected and being moved, causing the other sections to switch into a receptive state. The object may be dropped in any of the other sections, swapping it with the previous one, and possibly reshuffling the whole arrangement.
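The select, pull away and drop sequence described above can be read as a small state machine. The sketch below is one possible reading of it: the distance thresholds, the centimetre units and the class name are assumptions, and it only swaps two objects rather than reshuffling the whole arrangement.

```python
class GridShuffler:
    """Tracks which grid object is armed, held and dropped (hypothetical)."""

    def __init__(self, items, near=5.0, far=15.0):
        self.items = list(items)
        self.near = near    # hand counts as "on" a section below this distance
        self.far = far      # hand counts as "pulled away" above this distance
        self.armed = None   # section the hand was last close to
        self.held = None    # section whose object is being moved

    def update(self, distances):
        """Feed one reading per section (smaller = closer); return the grid."""
        closest = min(range(len(distances)), key=distances.__getitem__)
        if self.held is None:
            if distances[closest] <= self.near:
                # Hand is close to a section: mark its object as a candidate.
                self.armed = closest
            elif self.armed is not None and distances[self.armed] >= self.far:
                # Grab-and-pull gesture: the candidate is now being moved.
                self.held, self.armed = self.armed, None
        elif distances[closest] <= self.near and closest != self.held:
            # Drop on another section: swap the two objects.
            self.items[self.held], self.items[closest] = (
                self.items[closest], self.items[self.held])
            self.held = None
        return self.items
```

For example, with three sections, the readings `[3, 30, 30]`, then `[20, 30, 30]`, then `[30, 30, 4]` select the first object, lift it and drop it on the third section.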

Team: Keri & Abhigyan

Nov 12, 2007

Mittens AKA Twinkling Glove(s)

First we played with facial expression detection and so forth: taping sensors to, for example, the eyebrows to read the amount of surprise from their angle and position. Basically lots of tape on the face.

One would express emotions etc. with lights. You’d have the light bulb head for those Eureka moments.

We ended up going with pairs of mittens. Lots of possibilities there.

0 hands mode (mitten alone):

- Thermometer, mitten alerts when it’s cold: take me with you!

Oct 30, 2007 2

Hands on work started today

Oct 21, 2007

Components can be connected to Puduino and Maxduino

I made a list of the components that can be used with the Puduino and Maxduino patches, but not all of them have been tested. I will update the list when I find new components compatible with the patches.

Analog In


– AD592 Temperature Probe

– Photoresistor (Available from your local component shop)

– Flexiforce Sensor Demo Kit

– Sensors from I-Cube Sensor Products

Digital In


– QTI Sensor

– PIR Sensor

– Piezo Vibra Tab

– QT113-D Touch Sensor

– Magnetic switch (Reed Switch, available from your local component shop)

Analog Out


– LED (Available from your local component shop)

– DC motor (Available from your local component shop)

Digital Out


– LED (Available from your local component shop)

– DC motor (Available from your local component shop)

Oct 19, 2007 1

Continuous Motion, Discrete Signal

The concept involves defining discrete regions of space between 2 ‘pads’ (preferably small patches that can be attached to the body). Data is controlled in intervals as the proximity between the 2 objects increases and decreases.

For instance, instead of the continuous stream of data from a source such as a Theremin, there would be defined regions of space which would trigger a discrete sequence of a defined (musical) scale.

The user would not be touching the pads; the signals would be triggered by the pads moving closer to one another or farther apart. One scenario is that a person would have one pad attached to their hand and one to the top of their foot, and a range of intervals would be triggered depending on how close the hand and foot are.

Working Plan / Technical Solutions

The proximity sensor, PING Ultrasonic, communicates with BASIC Stamp and MAX/MSP via Arduino Bluetooth.

A program defines the distinct regions between the pads.
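A minimal sketch of what such a program might do: split the measured distance into equal regions and emit a note only when the pads move into a new region. The C major scale, the 10 to 90 cm working range and the names used here are assumptions for illustration; reading the PING sensor is left out.

```python
C_MAJOR = [60, 62, 64, 65, 67, 69, 71, 72]  # MIDI note numbers, C4 to C5


def region_index(distance_cm, min_cm, max_cm, regions):
    """Return which of `regions` equal slices the distance falls into."""
    clamped = min(max(distance_cm, min_cm), max_cm)
    fraction = (clamped - min_cm) / (max_cm - min_cm)
    # The far end of the range belongs to the last region.
    return min(int(fraction * regions), regions - 1)


class ScaleTrigger:
    """Emits one scale note each time the pads enter a different region."""

    def __init__(self, scale=C_MAJOR, min_cm=10.0, max_cm=90.0):
        self.scale = scale
        self.min_cm = min_cm
        self.max_cm = max_cm
        self.current = None  # region of the previous reading

    def update(self, distance_cm):
        """Return a MIDI note on a region change, otherwise None."""
        index = region_index(distance_cm, self.min_cm, self.max_cm,
                             len(self.scale))
        if index == self.current:
            return None
        self.current = index
        return self.scale[index]
```

With a noisy sensor, a reading that hovers on a boundary would retrigger notes, so some hysteresis or smoothing would probably be needed in practice.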

Oct 16, 2007

HanaHana

Oct 16, 2007 1

Mat Synthesiser

A “foot controller” for audio synthesis: a sort of “track pad”, sensitive to position and pressure, to be played with the feet.

Technical solutions: big pressure sensors (5 or 6) can be placed under a flat “mat” surface (20 cm × 20 cm) to give information in an axis system (x y z?) for Max/MSP or PD.
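One way to get x, y and z from a handful of pressure sensors is a pressure-weighted average of the sensor positions, with the total pressure as z. The sketch below assumes five sensors at the four corners and the center of the 20 cm mat; that layout, the units and the function name are guesses for illustration only.

```python
# Assumed sensor layout in cm on a 20 x 20 mat: four corners plus center.
SENSOR_POSITIONS = [(0.0, 0.0), (20.0, 0.0), (0.0, 20.0),
                    (20.0, 20.0), (10.0, 10.0)]


def mat_reading(pressures, positions=SENSOR_POSITIONS):
    """Return (x, y, z) for one set of sensor readings.

    x and y are the pressure-weighted position in cm, z is the total
    pressure. Returns None when nothing presses on the mat.
    """
    total = sum(pressures)
    if total <= 0:
        return None
    x = sum(p * px for p, (px, _) in zip(pressures, positions)) / total
    y = sum(p * py for p, (_, py) in zip(pressures, positions)) / total
    return x, y, total
```

The three values could then be sent on to Max/MSP or PD as continuous controls.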

Oct 16, 2007 1

Soup sounds

Preliminary plan:

In short the idea is to use chemical reactions as control data. Here the key question is: what kind of parameters can one measure in a liquid? A few that have come to my mind: salt level (conductivity?), temperature etc. I’m sure there are others…

This control data would be mapped to generate and/or modify sound. The idea is to create a performative composition with little direct user intervention, although it might be necessary to work as a cook and mix in different ingredients during the performance and change the temperature of the substance.

Oct 15, 2007 5

Soundtrack of our life

The working title for my project is “soundtrack of our life”. The idea for this project derives from one of my earlier works, a sound installation for an mp3 player, where the viewer/listener was offered an iPod set to play a huge number of various samples at random, changing the experience of the surroundings by changing the soundscape. This time I would like the sound to change because of the listener’s actions/movements.

Sensor input would change the sound. Ideally the user should be able to carry the set around.

For the technical solutions I only have some vague ideas at the moment. I do think that I need a sound source, sensors and a micro-controller that communicates with them both.

Oct 15, 2007 2

Robotic Orchestra

As a project for the physical computing course I’d like to realize a “robotic orchestra”. The basic idea is to control several motors that are connected to the computer via an Arduino board. The actions of these motors are controlled by a Pure Data patch. The motors serve as “real world players”. They can play, or rather “bang”, on all kinds of objects according to the control data they receive from the host software. Digital bits and bytes are translated into real motions.

I’ve seen a couple of people using solenoid motors for similar purposes, so this might be one direction to start with.

Technical requirements:

1.) arduino board

2.) motors (solenoid?)

3.) pure data

Oct 14, 2007 1

I Can

The working title of my project is I Can. This might be regarded as the can equivalent of I, Claudius, in the sense that it has something to do with I-dentity. The idea is to make a musical instrument out of a beer can and discover what its potential for sonic performance might be (er). If a performance is forthcoming, it will surely have something to do with the metaphor of ease vs. effort, i.e. the I Can contrasting with the I Cannot. In any case, the can will have to have some life of its own, otherwise it will not be its own I.

I would like to put a wireless Arduino inside, along with a battery box, some sensors and a servo motor to make the beer can shake (rhythmically?) on command from the remote computer. What sensors CAN I put inside? Perhaps touch, velocity, orientation, a microswitch to detect whether the ring cap is in place or lifted.

What sounds will I control with it? Maybe Offenbach’s Can-Can. OK, as yet I have no idea.

The programming of the beer belly to go with the instrument is another long term project. More on that when I know how many beers I had to drink before I found the ideal can for the prototype.

Oct 10, 2007 3

Nickname: persona

Short description:

Wondering today about how new ways of communication, enabled by present-day technology, affect the transformation and construction of human identity on the net, and how they can cause physical feeling and the perception of human energy to be lost or experienced in a new manner, the nickname: persona project is an interactive audiovisual installation in which people are obliged to act to make the piece possible. It wants to make them wonder about the kind of relationship established between them and how they are showing their identity to others.

The work would consist of taking data from visitors’ bodies with sensors, which would manipulate the sound, and taking snapshots of their faces to build a new face from all of them. Sound and image would be streamed to a website.

Development (working plan):

During the following three months:

1. Make some tests with sensors to get a feel for the kind of data that we can receive from the human body, and decide what kind of relation can be established between that data and the sound of the installation.

2. Make some tests on the placement of the sensors and how the visitor must use them.

3. Decide the shape of the presentation of this part of the project and also the whole project output.

Technical solutions:

Sensors (undefined)

Arduino

Max/MSP

Questions:

Are there any sensors that I could test before buying them? I’m interested in blood pressure, body temperature, sweat, heartbeat, nervous system… any other ideas?

Sep 23, 2007

Sound visualization using fire.

This is a very interesting sound visualization.

Rubens’ tube (Wikipedia)

Sep 20, 2007

Maywa Denki Edelweiss Series

Maywa Denki Official site

Edelweiss Series Video